The Ever Changing Google Algorithm Updates: How to Rank in 2020

Pose the question “How to do SEO for my website?” to any group of digital marketers and you’ll probably be met with different responses.

For the most part, you can classify what digital marketers know into these three categories:

Outdated baseline processes,

Methods based on updated standards, or my favorite:

Unconfirmed and conflicting facts from different sources.

Which is understandable, actually.

Whenever the topic of SEO comes up, the one constant in the conversation is how quickly the standards change.

And of course, the seemingly endless questions regarding how to adapt to these changes.

The good news? All these updates come from a single entity: Google, the company that holds 87.96% of the search engine market (as of October 2019), a market made up of more than 300 other entities.

The not-so-good news? Google updates its algorithm, and with it its standards, at an average rate of 500 times per year. Yeah, you read that right. That’s around 1-2 times a day.
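For the curious, the per-day figure is simple division. Here is a quick sketch, assuming the 500-updates-per-year average quoted above and a 365-day year (both constants are just the article's numbers, not anything published as code by Google):

```python
UPDATES_PER_YEAR = 500  # average quoted in this article
DAYS_PER_YEAR = 365     # assumption: a non-leap year

# Average number of algorithm updates per calendar day
updates_per_day = UPDATES_PER_YEAR / DAYS_PER_YEAR
print(f"{updates_per_day:.2f} updates per day on average")
```

That works out to a little over one update a day, which is where the "1-2 times a day" shorthand comes from.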

As a website owner or a digital marketer who relies on organic traffic for your own website or your client’s, it would be wise for you to:

Know what these updates do

Keep yourself updated whenever a new one is released

Don’t get me wrong, most of the updates that Google makes to its algorithm do not break the internet. They’re mostly just upgrades or expansions to the algorithm’s capabilities at the time they were released.

I did say “mostly”, though. There are some that change the SEO landscape for everybody.

However, whether an update is game-changing or a minor upgrade that flies under the radar of the people watching for these rollouts, a clear understanding of what Google is trying to do, and why, is very valuable.

“Mind-Reading” Google

Contrary to popular belief, it’s not impossible to ‘get inside Google’s head’ and figure out why they do what they do. It’s actually pretty easy to understand them.

All you need to do is take a look at Google’s Vision and Mission Statement as a company, and it will all make sense.

Google’s Vision

“to provide access to the world’s information in one click.”

Google’s Mission

“to organize the world’s information and make it universally accessible and useful.”

These statements basically boil down into two main things: Ease of access and Relevance.

Google wants to provide its users the information that they are searching for, now. Like right now.

While “ease of access” is easy to comprehend, relevance is a bit tricky due to how subjective it is.

It also begs the question: How does Google define relevance? What considerations does it use to decide whether my page is useful or not?

The right answer: A ton of different factors.

Let’s say you enter the search string: “How to do SEO in 2020”.

Google’s mission and vision statement means:

All the results that will be delivered to you in SERP will answer your question one way or another

All the results that can answer your question directly or have high relevance to your question will be on top of the SERP rankings

The content of all the pages on the first page will address your queries without you needing to go back and look at another page (that back-and-forth is known as ‘pogo sticking’)

The results on the first page are web page links that users who searched for the same thing clicked the most because they found it relevant to their queries (high CTR)

The results on the first page of your SERP are being linked to and referenced by other websites that are discussing the same topic.

The contents of the pages delivered to you are verified true and correct since they were created by verified experts, have high authority, and have evident trust signals.

These are but some of the observable aspects that influence how Google’s direction aligns with their corporate mission and vision.

There are many more. In fact, there are 200 known ranking factors, all of them handled by an algorithm in a process that takes but a quarter of a second.

Let me assure you that you don’t have to rack your brain trying to figure out all the ranking factors that none of us are aware of.

Having clear insights on how our primary search engine thinks, and what they’re trying to do is enough to understand and at times even predict what their algorithm is trying to do.

Because at the end of the day, Google’s number one priority is not Website Owners or Digital Marketers.

Their top priority is the satisfaction of their Customers.

With that said, let’s take a look at the Google Algorithm updates that made waves in the known SEO world.

The Legacy Google Algorithm Updates that You Should Know About

Not all algorithm updates are created equal. While some have major and long term effects on how web pages are ranked and the way we do SEO, others change only a small aspect of the whole ranking process.

In this section of our article, we’ll be reviewing the algorithm updates that matter to your website and how they shape its performance in the field of SEO.

The Google Panda Update

Launched: February 23rd, 2011

One of the first prominent algorithm updates was launched in February 2011 and was given the codename “Panda”.

It covered huge ground since it made a wide range of permanent changes to the way the algorithm decides ranking.

No, the name doesn’t pertain to the huge cuddly Asian bear we’re all familiar with. It was actually coined after the engineer who made it: Navneet Panda.

And back in those days, the website owners and digital marketers doing SEO hated his guts, because Google launched the Panda update without any warning.

That said, Panda revolutionized how SEO was seen by everyone concerned about it online. It prompted website owners and digital marketers to turn their heads and pay attention.

It’s not a stretch to even say that Panda was a wake up call to all entities that existed online.

What Did Google Panda Change?

To understand what changed with this update, we need to look at how Google’s search engine used to work before it was implemented.

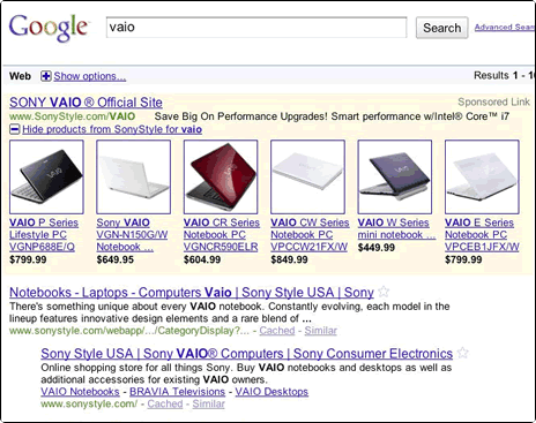

You see, back then it was all about speed and quantity: The faster you could produce a page that has content filled with the highest density of keywords, the higher your rank is.

It was a nightmare for content creators. Imagine filling your websites with pages that say this:

“Are you looking for cheap running shoes? If you’re looking for cheap running shoes, look no further. Our cheap running shoes website is the best place to order your new cheap running shoes. Feel free to check out our selection of cheap running shoes from our cheap running shoes selection below. Or check out our other offers for cheap running shoes from this link: www.cheaprunningshoes.com "

Looks bad, right?
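To put a number on how stuffed a paragraph like that is, here is a rough keyword density sketch. It is only an illustration: the function name `keyword_density` and the tokenizing rule are my own assumptions, and Google has never published a density formula it uses.

```python
import re

def keyword_density(text: str, phrase: str) -> float:
    """Share of the words in `text` that belong to an occurrence of `phrase`."""
    # Lowercase and keep only runs of letters and digits as words
    words = re.findall(r"[a-z0-9]+", text.lower())
    target = phrase.lower().split()
    n = len(target)
    if not words or n == 0:
        return 0.0
    # Count every position where the full phrase appears word for word
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == target)
    return hits * n / len(words)

sample = (
    "Are you looking for cheap running shoes? "
    "If you're looking for cheap running shoes, look no further."
)
print(f"{keyword_density(sample, 'cheap running shoes'):.0%} of the words are the keyword")
```

Natural writing rarely repeats one three-word phrase this often, which is why a high share like this is a decent smell test for stuffed copy.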

After Panda was rolled out, contents with poor quality like our example above could never hope to see the light of SERP’s Top 10 anymore.

More than that, it also changed how links were built, since only high-quality and relevant links would add value when it comes to SEO rankings.

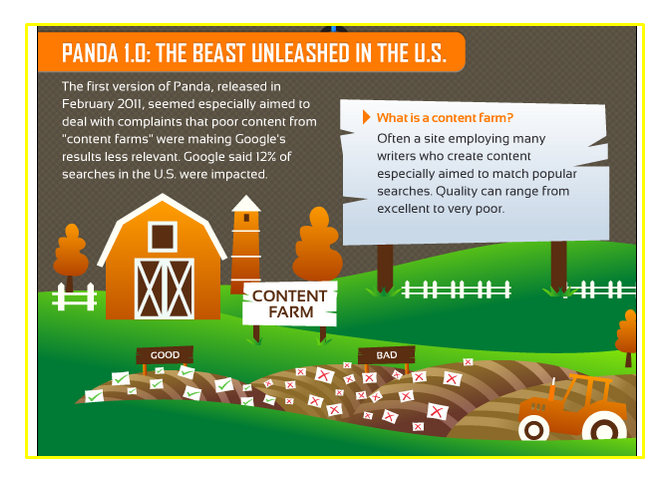

One of Panda’s biggest targets was Content Farms. These are websites that hire tons of writers to create content about anything and everything under the sun.

What’s bad about these sites is the quality of content they produce isn’t consistent. Most articles are produced not by authors who are considered as experts in the topic but writers who piece content puzzles together.

Don’t get me wrong, content farms do have the capability to produce excellent content. But it’s mostly outnumbered by the low quality ones.

Either way, Panda 1.0 cracked down on pages with mediocre to bad content quality that the previous algorithm let slip through the cracks.

Yeah, you read that right: Panda 1.0.

The fluffy sounding algorithm update released in 2011 was the first of many versions that followed it.

In fact, there have been a minimum of 28 total updates with the most recent one released in July 2015.

I say minimum since there may have been minor tweaks here and there applied to the algorithm.

While we’re not going to go through all 28 updates that Panda went through, let’s highlight the major ones that made waves:

Panda 1.0

Like we mentioned earlier, the first Panda version targeted content farms.

Now this doesn’t mean all multi-author sites or online companies providing content creation services were hit. It really depends on the quality of the articles and/or copy that’s being produced.

High quality in-depth content that produces high engagement from readers as well as reference links in different platforms and pages online still continued to rank well.

In short, Panda 1.0 pushed content produced online to be credible and to aim at providing readers with accurate information.

Panda 2.0

Released in April 2011, the second Panda version pointed its digital scope on international search queries.

Google UK, Google AU, as well as English queries in non-English versions of the search engine such as Google France and China became part of the algorithm’s coverage.

Google’s Search Quality officer Amit Singhal further reiterated their corporate mission in his statement saying: “Google focused on showing users the highest quality, most relevant pages on the web.”

Panda 2.1 - 2.3

The months of May up to July 2011 saw the launch of minor updates that incorporated additional signals to indicate site quality.

These signals saw to it that low-quality content and pages would be penalized, while those who worked their hardest to produce the best quality content would be rewarded with high organic traffic.

Panda 2.3

This update also focused on gauging the User Experience that websites provide to their customers.

This means that website owners and content creators that published articles and pages which resulted in high engagement rates and enhanced their site’s navigation saw benefits in their ranking as well.

Panda 2.4

This update, which was released in August 2011, affected anywhere from 6% to 9% of search queries globally. Michael Whitaker said that its main focus was to “Improve site conversions and engagement.”

Panda 3.0

This ultra powerful search filter was initially rolled out on October 27, 2011. The official announcement from Google didn’t come until 5 days later.

This update saw huge names in the online world like CNN and IGN ranking higher up in SERP.

Also, in the updates leading to 3.0, Google increased the frequency of their algorithm updates after Panda 2.5. This was also referred to as the Panda Flux.

With the competition raising the already high stakes further, website owners began feeling the pressure to produce better content and improve the engagement of their sites.

Panda 3.1

An update that was launched in November 2011, this Panda version affected about 1% of the total search queries. While you might think that 1% is insignificant, in comparison to the total amount of searches online, that’s at least a 7-figure number.

This minor update was a result of Google trying to improve the relevance of its search results further in order to provide its customers with useful information.

Panda 4.0

Website owners thought it was unfair of Panda to prioritize the large sites that had huge opportunities to dominate the SERP rankings. So when version 4.0 was released in May 2014, the algorithm targeted those large domains.

Their requirement: consistently create and publish high quality, relevant, and in-depth content.

And not just any content. These websites were required to create articles that add value, are interesting to readers, and directly resolve the issue that customers are searching for answers to.

All while being available to mobile devices.

Do you own a large website? Then be ready to deal with higher quality standards.

Panda 4.1

This version saw Google cracking down again on websites that stuff keywords into their content. This small update impacted 3-5% of the total search queries.

Panda 4.2

The last version in the Panda algorithm update was released in July 2015. This saw major gains for websites that worked the hardest to optimize their pages based on the standards that the algorithm was continuously throwing at them.

To summarize, you can consider Google’s Panda update to be the be all and end all of content quality standards. The intent of the search engine giant here was to bring down websites who rely on low-effort content.

As mentioned earlier, it was an eye opener to webmasters and digital marketers alike. The era of keyword stuffing had ended, and Google was ushering in a new age where content quality is the name of the game.

This update saw Google fighting against the marketers who use questionable and suspicious methods in ranking their pages. All in the goal of giving the customers of their search engine the best online user experience they could ever have.

The Page Layout/Above the Fold Google Algorithm Update

When Panda was released in 2011, a lot of people online got angry at the engineer it was named after since the general consensus was the algorithm update was released prematurely.

Google noted this reaction and by the time it rolled out its update early in 2012, the message became clear: When it comes to Customer User Experience, Google means business.

The Page Layout Algorithm has landed.

This major algorithm update targeted websites that have too many static advertisements at the top of their pages, what I endearingly dubbed the “Landing Screen”.

These ads force website visitors to scroll down once, sometimes twice, before they can see the content they clicked the SERP link for.

And that’s on computer browsers. Imagine visiting these sites on your smartphones.

Oh, and in case you missed it, this algorithm isn’t targeting search queries. It’s targeting websites. 1% of all the websites existing online were affected, to be exact.

This Algorithm update was rolled out on four different dates:

Algorithm Launch: January 19, 2012

The first “Page Layout Algorithm” was launched, targeting websites that load Ads at the top of their web pages’ fold before the actual content.

For the next 9 months, website owners who were hit hard by this update were clamoring left and right and racking their brains to find a solution to the losses their pages suffered.

Google did not present any guidelines as to how those who were impacted could recover from the penalties given to them.

Most website owners did the right thing: Fixed their page layouts to prioritize their customers’ user experience.

In short: Google succeeded in its goal.

Algorithm Update: October 9, 2012

The affected sites found no reprieve until 9 months later when Google updated the algorithm.

This gave the websites who ‘repented’ by modifying their page layouts to improve user experience an opportunity to start recovering from their ‘penalties’.

The update also affected 0.7% of all known queries, although what the effects were was not specified at the time.

Algorithm Refresh: February 6, 2014

Google refreshed the algorithm and updated its index early in 2014. However, Google’s spokesperson Matt Cutts didn’t mention any impact on search results or how it would affect page ranking.

Looks like it was simply what Google publicized it to be, a refresh.

The Algorithm Becomes Automated: November 1, 2016

During the Webmaster Hangout that Google held on the same date, John Mueller stated:

“That’s pretty much automatic in the sense that it’s probably not live, one-to-one. We just would recall this, therefore, we know exactly what it looks like. But it is something that’s updated automatically. And it is something where when you change those pages, you don’t have to wait for any manual update on our side for that to be taken into account.”

Thus it has become more evident that Google would automatically identify changes to your website after it gets crawled by the algorithm, and then adjust its rankings based on the results.

By 2017, Google’s Gary Illyes confirmed the relevance of the page layout algorithm and that it’s still a big deal to consider in page rankings.

The Google Penguin Algorithm Update

Now I know what you’re thinking so I will address this first: No, Google doesn’t have a software engineer named Penguin.

It’s not a similar naming method like what they did with the Panda Algorithm. In fact, no one knows where the name came from.

This update launched in April 2012 had its eyes set on one thing: Spam.

Specifically, the quality of the links providing ‘votes of confidence’ to web pages hence affecting their ranking in SERP.

This means site owners who have been buying links or acquiring them from link networks in order to beef up their page ranking will find themselves caught in the algorithm’s net.

Also, sites that still persisted in saturating each of their pages with their focus keywords, a practice commonly known as Keyword Stuffing, ran a high risk of incurring the wrath of Google’s ruthless pet Penguin.

Once again, Google was trying to send a message to all webmasters, website owners, and digital marketers: The Spam Sheriff is in Town.

Site owners were given an ultimatum:

Either clean up and free up their websites from suspicious, spammy, and unnatural links, as well as high keyword density pages, or face the consequences of never seeing the light of Google SERPs first page.

The Search Engine Giant was not entirely ruthless in its implementation. As long as website owners disavow the bad links pointing to their sites and create high quality content, Google’s Pet Penguin will turn its gaze somewhere else.

Here are some key changes that defined the Penguin algorithm:

Google Penguin 1.1: Rolled out May 2012

More of a “refresh” rather than an update, this instance saw improvements in ranking for those websites who were initially hit hard by the algorithm when it first launched and have proactively taken steps in rectifying their site’s low link quality and high keyword density pages.

Google Penguin 1.2: Rolled out October 2012

Another data refresh that saw English language queries affected, whether they be from local English speaking countries or international Google search engines that have English queries.

Google Penguin 2.0: Rolled out May 2013

Mid 2013 saw a more advanced version of the Google Penguin algorithm being introduced to the online world.

This Penguin update impacted 2.3% of all English queries, with other languages affected at a similar rate.

This also marks the first Penguin update that scanned website home pages as well as top-level pages for presence of suspicious links that the site is benefiting from.

Google Penguin 2.1: Rolled out October 2013

A refresh of the 2.0 version rolled out later in the same year, which saw 1% of the total search queries impacted.

In typical Google fashion, no specific explanation was offered for this version of the Penguin algorithm. Although, data gathered from results suggested that the internet “Sheriff” has been doing some advanced-level digging.

This time, instead of just crawling home pages and top level pages, it is now diving deep and searching every nook and cranny of a website to look for spammy links and high-density keywords.

Google Penguin 3.0: Rolled out October 2014

Now don’t let the whole number version fool you into wondering if this was a major update because it wasn’t. In fact, it was just another refresh of the algorithm.

However, it did allow those affected by the previous versions of Penguin to recover and emerge from the ruins they got buried into.

For the sites that continued suspicious and questionable link practices and website owners that deluded themselves into thinking that they had avoided Penguin’s radar unscathed, this was when they felt the cold slap of the algorithm’s fins hit them.

This cycle of implementations and refreshes continued for the next two years, until the final Penguin update was launched.

Google Penguin 4.0: Rolled out September 23, 2016

There are two significant changes to take note of in this version:

Penguin became part of the core algorithm

Penguin evaluated websites and links in real-time

Before, it took an algorithm ‘refresh’ before websites felt the impact of being hit by the algorithm. Now site owners won’t need to wait.

For those who have been working hard to clean their websites of spammy links and high keyword density pages, the algorithm responds with instant rewards in terms of search traffic and ranking in SERP.

The same principle goes, albeit an opposite effect, for those who are trying out questionable methods in gaining backlinks to their sites.

This final update also saw Google’s pet Penguin becoming a bit docile in handing out punishment.

In the previous versions, penalized sites got hit hard in rankings. With 4.0, it was just the links that were devalued.

Studies do show, however, that the algorithm penalties relating to backlinks are still in effect until today.

The EMD Filter

2012 had another key player running alongside other high-profile algorithms being launched for that year.

Though it’s not really an algorithm update in the strict sense of the word, but more of a ‘filter’. It’s called EMD, which stands for Exact Match Domain.

It targets what it’s named after: Exact Match Domain Names.

It is worth clarifying that domains with exact match names aren’t being targeted exclusively. However, it has been found that sites with these naming conventions are mostly the ones utilizing spam tactics.

To specify: The EMD filters out Exact Match Domains that have poor quality and thin content.

Here’s what prompted the creation of this filter:

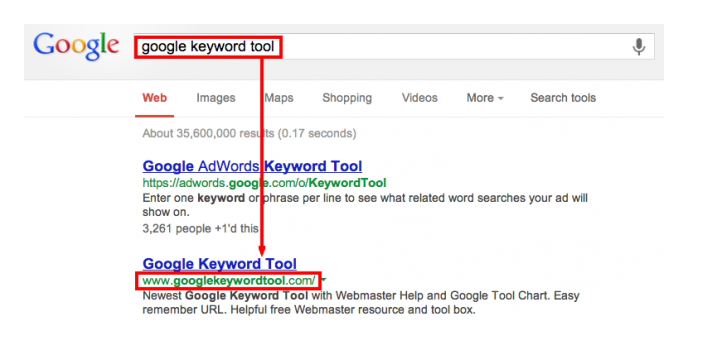

Digital Marketers would buy domains with exact match keyword phrases.

A site would be built on each of these domains, but since the owners had their main website to take care of, the content produced for its pages was of very low quality.

These sites would then rank higher in SERP than more relevant ones, only because they had an exact match keyword in their domain.
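The pattern above can be sketched as a toy check. This is not how Google's filter works internally (that was never published); the function names, the `word_count` input, and the 300-word threshold are all hypothetical choices made for illustration.

```python
def is_exact_match_domain(domain: str, keyword_phrase: str) -> bool:
    """True when the domain name is just the keyword phrase squashed together."""
    host = domain.lower()
    if host.startswith("www."):
        host = host[len("www."):]
    # Take the label before the first dot and ignore hyphens
    name = host.split(".")[0].replace("-", "")
    return name == "".join(keyword_phrase.lower().split())

def looks_like_emd_spam(domain: str, keyword_phrase: str,
                        word_count: int, min_words: int = 300) -> bool:
    """Flag only exact match domains whose pages are also thin.

    `min_words` is an arbitrary illustrative threshold for "thin content".
    """
    return is_exact_match_domain(domain, keyword_phrase) and word_count < min_words

print(looks_like_emd_spam("www.cheaprunningshoes.com", "cheap running shoes", word_count=120))
```

Note that both conditions have to hold: an exact match domain with in-depth content is not flagged by this sketch, which mirrors the point that the filter goes after thin, low quality sites rather than the domain name alone.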

Now more than a year before the filter was rolled out, Matt Cutts from Google already warned interested parties in the online world that future updates will be looking closely into these unfair methods and practices being done.

He detailed this in a webmaster video published on March 11, 2011.

The EMD update made several experts in the known SEO world question whether the use of exact match keywords in domains was wise knowing that we have a filter prowling about.

Compiled data shows a very steep decline for exact match keyword domains that formerly held rankings.

The consensus after the update? Overall, domains that aren’t dot-com were penalized more severely than the .com ones, which in essence withstood the updates that occurred.

EMD’s process wasn’t a one time thing. It was run by Google periodically in order to do two things:

Allow websites that took action to make their page contents better a chance to recover from the decline in rankings

Allow Google’s algorithm to catch the sites they missed during the last time the process was run

Remaining Myths and Misconceptions about the EMD Update

While Google has been adamant in its presentation of the qualifications that the EMD filter uses to evaluate websites, there were still doubts being presented by people who have interest in SEO.

Specifically, the idea that having exact match keywords for domains could still prove valuable for a website.

One of the biggest myths circulating back then was the claim that Google went after all exact match domains.

While the compiled results might make it look that way, not all webmasters purchase domains with exact match keywords for malicious purposes, so the claim is shaky at best.

The Google Payday Loan Algorithm Update

2013 saw a major algorithm update that rocked the SEO world to its core.

And while it only impacted 0.3% of queries in the US, it was still significant enough that almost anyone who’s someone in the SEO space was talking about it.

Its main target: Spam Queries mostly associated with shady industries and the websites that capitalize on them.

These include but are not limited to: Online Loan Sites with super high interests, Payday loans, Investment Scams, and Porn.

Matt Cutts, the Head Honcho of Google’s webspam team, hinted that the impact of Payday was as high as 4% for Turkish queries. He specified further that these types of queries have a lot of spam associated with them.

He also stated that aside from payday loans and debt-consolidation related sites, Payday will also target heavily spammed websites that have to do with medicine, casinos, real estate, and insurance.

Google rolled out the Payday Loan Algorithm in three versions:

Payday Loan 1.0: June 2013

That time, not much was known about the first version aside from the fact that it targeted spam queries and the niches that use them in high density.

Payday Loan 2.0: May 2014

This update saw the algorithm implementing a link-based process focusing on high volume and CPC keywords that have high potential of spamming.

Payday Loan 3.0: June 2014

By this time, negative SEO was becoming a very real concern for webmasters and website owners. This is why Payday 3.0 included protection against negative SEO attacks.

Negative SEO is the malicious use of blackhat methods targeting competitor websites whose goals range from bringing down their ranking in SERPs to actually having the site penalized through the use of malevolent spam links.

Being an algorithm that identifies spam queries and links, Payday was in the right position to act as a shield for legitimate websites and protect them from this type of attack.

The Google Hummingbird Update

While this update was announced by Google in September 2013, it had actually been in place and running a good month before that.

Unlike Google Panda and Penguin, which preceded it, the Hummingbird update was not just an update to an existing working algorithm. Rather, it was a total makeover of the core algorithm.

Also unlike its predecessors, instead of reporting losses in search traffic and ranking, it seems that the Hummingbird implementation resulted in gains.

It was then clear that this Algorithm has a positive influence on how accurate Google is when it comes to defining Search.

To better understand this core algorithm update, we’ll need to take a look at the two search engine features it had the most effects on:

Semantic Search

Knowledge Graph

Understanding Semantic Search

Back in 2010, SEO meant stuffing as many keywords and backlinks as you could into one page to be able to see it rank in SERPs (not just Google).

It wasn’t even as systematic as that. We had to look at pages that were already ranking, divine what made them rank, and somehow reverse engineer that for our own pages.

For contrast, here’s how Google’s Search Engine worked before Semantic Search, compared with an ordinary conversation:

If in a conversation, you ask your friend “What is the fastest land animal in the world?” and then follow it up with the question “How fast is it?”, your friend will understand that “it” refers to the fastest land animal, which is the cheetah.

Well, search engines used to treat those queries as two separate things. That means they would give you web pages that exactly match the keywords “How fast is it” for your second query.

With the birth of Semantic Search, and with the core changes brought upon by Google’s Hummingbird to the algorithm, ranking factors have greatly evolved.

And along with them, our methods for optimizing our websites’ pages. Identifying keywords to rank for will no longer suffice. We now need to understand what those keywords mean, and what the search intent behind them is.

This is where Semantic Search comes in. It is the method that the algorithm uses to understand a language the same way any human being would.

In short, Hummingbird tries its best to understand the following:

The Intent of the person Searching

The context of the Query the person used in Searching

The relationship between the words used in the Query

Google utilizes Semantic Search to distinguish between different entities like people, places, things, or events.

The searcher’s intent is then identified based on a variety of factors:

Search history

Geographic location

Global search history

Variations in spelling

Let’s say you’ve been searching for horses in the past hour. A query containing the word “Mustang” would give you results about a free-roaming domestic horse breed instead of Ford’s ultra-sexy sports car.
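As a toy illustration of the “Mustang” example, the sketch below guesses a sense by counting how many words from recent searches overlap with a hand-written vocabulary for each sense. Everything here (the `SENSES` table, the `guess_sense` function, the word lists) is made up for illustration; Google's real signals and models are far richer and are not public.

```python
# Hypothetical vocabularies for the two senses of "Mustang"
SENSES = {
    "horse": {"horse", "horses", "breed", "saddle", "stable", "riding"},
    "car": {"car", "cars", "ford", "engine", "horsepower", "dealer"},
}

def guess_sense(recent_queries, senses=SENSES):
    """Pick the sense whose vocabulary overlaps most with recent searches."""
    history_words = set()
    for query in recent_queries:
        history_words.update(query.lower().split())
    # Score each sense by the number of shared words
    scores = {sense: len(words & history_words) for sense, words in senses.items()}
    best = max(scores, key=scores.get)
    # With no overlap at all, there is nothing to base a guess on
    return best if scores[best] > 0 else None

print(guess_sense(["horses for sale", "horse riding lessons"]))
```

With an hour of horse-related searches behind you, the horse sense wins; with an empty history, the sketch declines to guess, which is roughly where other signals such as location would have to step in.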

The Knowledge Graph: Google’s Digital Census

Since Google’s objective is for its algorithm to understand its customers’ search intent as humans would, it needed to elevate the importance of context and entities above keyword strings.

Or as per Google’s own words: "Things, not strings."

And in 2012, the largest database of online public information was born: The Knowledge Graph.

This massive library is tasked with collecting and organizing data from all public domains online.

It holds everything from the cast of all 11 Star Wars films and the fuel efficiency of a 1997 Honda Civic to how many nautical miles the restricted airspace around Washington DC covers, plus a billion other pieces of information.

Google’s Knowledge Graph opened doors to a lot of opportunities for upcoming large-scale algorithm updates.

Circling Back to Hummingbird

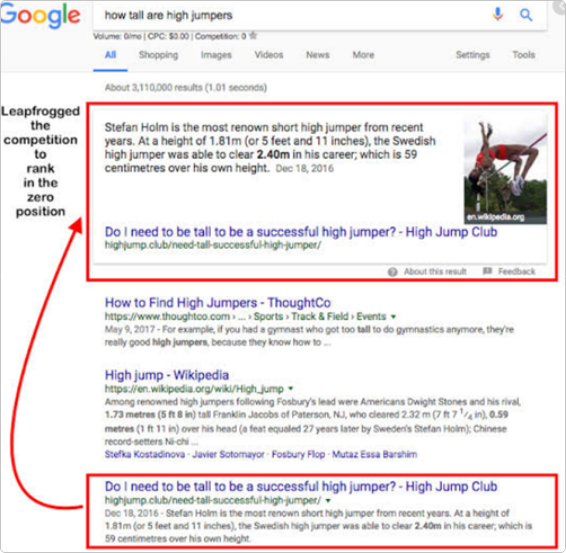

It is Hummingbird’s job to make sure that pages in SERP that match the meaning of a query are ranked higher than pages that match a search query’s words but not its context.

The Google Pigeon Algorithm Update

July 2014 saw another bird joining the flock in Google’s Algorithm. The Pigeon, not a name officially given by Google but by Search Engine Land, was an update targeted at local search results.

Aptly named so, as Pigeons are known to fly home. A fitting moniker for this local search algorithm update.

As per usual Google fashion, the core changes were well hidden behind the scenes. However, as SEO digital marketers usually do, we observed the results and gained the insights needed to interpret what the changes meant.

Well Google did drop a couple of details regarding this update:

The new local search engine algorithm combines all the web search capabilities including their ranking signals and search features like the knowledge graph, spelling correction, similar keywords, etc.

The new algorithm improves on their distance and geographic location ranking factors.

Thus it applies mainly to Google Maps and Google Web search results with local search intent.

In essence, the main goal of Pigeon is to offer better local search results by giving better visibility to local businesses that exude strong organic presence online.

Pigeon is not an update that addresses quality or spam like its predecessors. It’s also not a penalty-based algorithm that will ‘punish’ contents in search results with low quality. It’s a change in the actual ranking factors of the search algorithm.

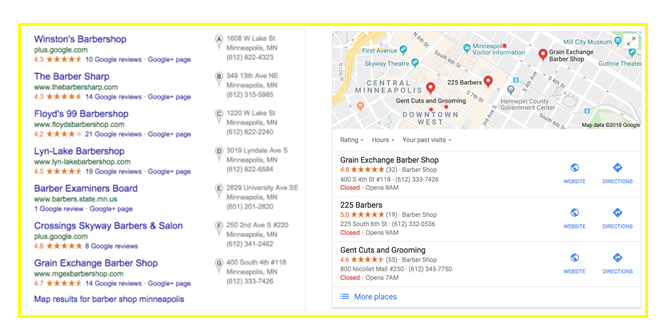

Pigeon and the “Google Packs”

While the Pigeon update made several notable changes as mentioned above, the most observable to date was its effect on the “Google Pack” features.

This pertains to the 7 local businesses that Google features if a search query reflects a local intent, such as a geographical keyword.

Back in its initial launch, the Google Pack featured 10 businesses on their list. This number saw a steady decline, until a year after Pigeon’s release when it was reduced further to 3 listings per pack.

This massively significant update that affects local businesses saw those who didn’t make it to the top 3 as being left out of the ranking. It also made ranking much harder since there are only 3 positions to vie for.

One Unintended Effect

Like many other Google updates, the rollout of the Pigeon algorithm changes had one unwanted result: Spam listings slipped through the cracks.

This further emphasized the weakness of Google’s local search algorithm and made manual reporting of violations a necessity.

Another weakness exposed by the Pigeon algorithm update was Google’s inability to differentiate between legitimate businesses and businesses edited to have exact match keywords in their domains.

Since spammy local businesses kept slipping through the cracks, the legitimate businesses working hard to rank in the Google 3-pack found themselves pushed out of the rankings as a result.

The Google Mobilegeddon Algorithm Update

This is quite possibly the simplest algorithm change ever released in the online world. But despite its simplicity, its impact on how Google looks at websites is immensely significant.

The Mobile-Friendly Update, as Google called it, was rolled out in April 2015. It was immediately given several cheesy names by marketers: mobocalypse, mopocalypse, mobilepocalypse; the list goes on.

What eventually stuck was the name given by Search Engine Land’s Barry Schwartz: Mobilegeddon

Specifications of the Update

I mentioned earlier that the change brought about by Mobilegeddon is incredibly simple. And it was: Your website is either mobile friendly or it isn’t.

One of the reasons that made it simple was how absolute it was. It’s black or it’s white, no gray areas.

It does merit asking the following questions:

What does Google mean by “Mobile Friendly”?

What constitutes “Mobile Friendliness” for Google?

In their post on the official Webmaster Central Blog, Google (thankfully, this time) briefly explained the update along with an image example to show what they meant by “Mobile Friendliness” in websites:

Google also clarified that this update has the following scope and limitations:

Only affects search rankings on mobile devices

Scope of mobile search results in all languages is global

Only applies to individual pages, not websites in its entirety

Ushering a New Era

I also mentioned earlier how immensely important Mobilegeddon was to the online world. That importance comes from what it signified to digital marketers and website owners alike.

Google’s message was clear: They’re trying to move the entire online market towards the direction they want.

And thus began the Era of Mobile-first indexing.

When this update was released, the majority of the online community of webmasters and digital marketers groaned collectively.

And for good reason: this meant that we would have to create a separate layout of our web pages that was meant for mobile browsing.

Don’t get me wrong, we don’t hate Google for making our lives harder than they already are. That’s a common misconception.

Rather, Google is trying its best to follow its mission statement by improving the experience for their customers as much as possible, while aligning it with market trends based on user behavior.

Mobilegeddon is not just about rankings and search traffic. More than that, it was Google responding to the behavioral trends of their consumers.

The RankBrain Algorithm Confirmed

With the advancements in search engine algorithms that Google has been rolling out one after another, and at a very fast rate, SEO people have started to wonder how the changes are identified, analyzed, and implemented.

Especially Google’s capability to read through search intent, which is a human aspect of behavior.

With an average daily search of 3.5 billion, this gargantuan process would either require massive inputs which would need huge manpower resources or one very powerful capability: Machine Learning.

And it was 2015 when the educated guesses of webmasters and digital marketers were confirmed by Google: RankBrain does exist.

What RankBrain Does

To best define what RankBrain adds to the algorithm’s capabilities, we need to first look at another algorithm it is closely related to: Google’s Hummingbird.

As mentioned earlier in this article, Hummingbird defines keywords based on the contextual words supporting it in the same search query.

For this algorithm, the number of times a keyword shows up on a single page is irrelevant. Hummingbird searches for the context of the content holistically on every page it crawls.

However, Hummingbird has one glaring weakness: It can only gauge context for keywords and queries it has seen before. It has the ability to learn new keywords but can’t define new search queries and word combinations.

And therein lies the challenge, since 15% of all queries made daily are new to the algorithm.

This is where RankBrain comes in.

RankBrain handles the processing for unfamiliar and unique queries. What it does is relate new queries to existing searches in order to provide users search results that are more relevant.

Let’s say you used a search query that Google isn’t familiar with and it gave you irrelevant results. You’ll probably try different search queries that would finally take you to the answer you’re looking for, right?

RankBrain will take note of all the search queries you use until you find the results you were looking for, including the results you clicked on in the SERP. These clicks act as a ‘signal’ to the algorithm that those pages are part of your considerations.

It will then compile and organize these pages and mark them as relevant to your original search query.

The result: The next time you or other users search using your initial search query, a more relevant set of pages will be presented to you.
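To make that loop easier to picture, here is a toy sketch in Python. It only mirrors the behavior described above and is in no way Google’s actual system: the `SessionLog` class, its method names, and the sample queries and URL are all hypothetical.

```python
from collections import defaultdict


class SessionLog:
    """Toy model: remember which pages satisfied a searcher after an unfamiliar query."""

    def __init__(self):
        # Maps an original query to the pages later marked as relevant for it.
        self.relevant_pages = defaultdict(list)

    def record_session(self, queries, clicked_pages):
        """Associate every clicked page with the first query of the session."""
        if not queries:
            return
        original_query = queries[0]
        for page in clicked_pages:
            if page not in self.relevant_pages[original_query]:
                self.relevant_pages[original_query].append(page)

    def results_for(self, query):
        """Return pages previously marked as relevant for this query (may be empty)."""
        return list(self.relevant_pages.get(query, []))


log = SessionLog()
log.record_session(
    queries=["grey console made by sony", "sony first console", "playstation 1"],
    clicked_pages=["example.com/playstation-history"],
)
print(log.results_for("grey console made by sony"))
```

The point of the sketch is the association step: the page that finally satisfied the searcher gets tied back to the query they started with, so the next person who types that same first query has a better starting point.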

The Three Essential Concepts of RankBrain

Different queries would have different ranking signals

Before RankBrain, traditional ranking signals such as link quality, content quality, exact match keywords, and partial match keywords were among the main factors assessed in website optimization.

With the implementation of RankBrain, SEO people would now need to consider a new and essential aspect for ranking their pages’ contents: How to best provide what their users are searching for.

Ranking signals also affect the reputation of your website

Building your website’s reputation as an entity trusted by search engines and customers alike is one of the goals of SEO, alongside providing a satisfactory user experience.

Ranking well for the keywords you are focusing on is beneficial to establishing this type of reputation.

Depending on the topic you cover, building reputation based on the freshness of information you present, how much your user engages with your content, and the diversity of the links that you are earning, are all essential factors that you would need to focus on.

As time passes, your website would need to build its reputation revolving around the signals it wants to be known for.

RankBrain helps foster an environment where your brand can become known for how well it delivers the type of content that satisfies your customers’ needs.

The concept of having single-focus keywords for each page is obsolete

After RankBrain, creating one page for every variation of a single keyword is akin to beating an already dead horse.

In fact, it may even backfire, since it would cause your own pages to compete against each other in the SERP. Not to mention that the ranking strength each of these pages brings to the table would be particularly weak against their modern counterparts.

This is due to the fact that RankBrain is trying to mimic how humans understand search intent. And we human beings don’t think in keywords. We think in topics.

The right way to do it after RankBrain? Create a page that focuses on topics, not keywords. Then strengthen that page with as much supporting context as you can.

SEO people had been foreseeing this change for a couple of years before RankBrain even became a thing.

And with the confirmation of this Machine Learning System, the wisdom of creating comprehensive content from topics gleaned from keywords became more evident.

The Google Intrusive Interstitials Update

In case you find yourself new to the term “Intrusive Interstitials”, they go by a common name you’ll surely recognize: Popup Ads.

They embody everything wrong about creating engaging content and fostering a satisfactory user experience because:

They block most of the content of the page you’re viewing, either immediately after the page loads or by interrupting your interaction with the page

They force you to dismiss the ad before you can view the main content

They redirect you to another page by opening a new tab or a new window

They close the page you’re viewing by loading a new one on the same tab, or

They display ads that have nothing to do with the niche or the topic of the page you are viewing

Bottom line, they’re annoying. And by now you know how much Google abhors anything that annoys their users.

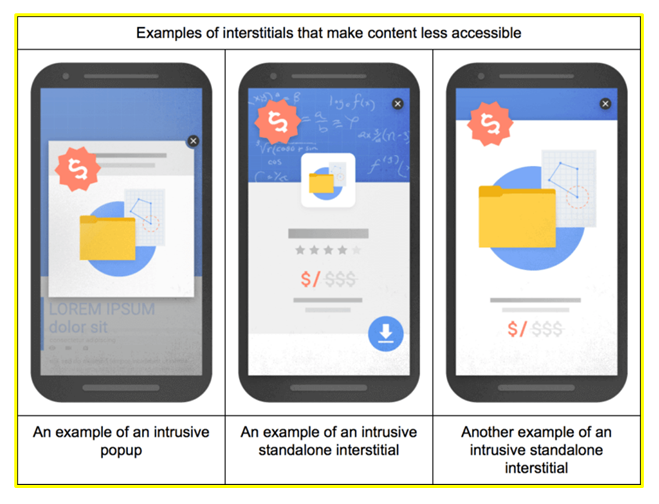

Here’s a diagram showing what intrusive Interstitials look like:

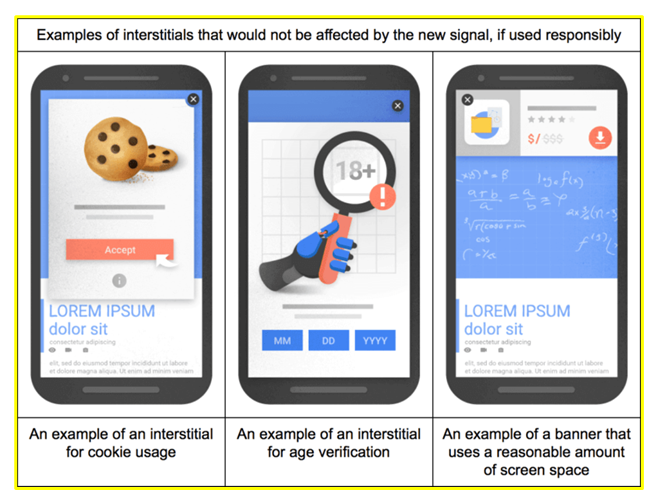

There are “good” interstitials though, which would not be affected by the new signal if used responsibly:

Interstitials that respond to a legal obligation, like asking for permission to use cookies or verifying one’s age before showing age-sensitive content.

Sign in or login dialog boxes (also called lightboxes) whose contents are not indexed publicly. These contain fields for entering emails and passwords that should not be crawled by the algorithm.

Ad banners that use a small amount of screen space that is considered “reasonable” for users and are easily dismissible.

Here’s an image showing unintrusive interstitials:

The Google Fred Update

In relation to the Intrusive Interstitials Update, Google released another algorithm change. Dubbed Fred, it caused widespread online panic among digital marketers and webmasters alike.

The main reason it made so many waves online was that a large number of websites took massive hits in search traffic seemingly overnight.

SEO people tried their best to assuage clients, since most of them saw as much as a 90% decline in online traffic.

Released unannounced in March of 2017, Fred cracked down on websites that had been prioritizing monetization and ignoring user experience.

These are sites with heavy ad placements on their pages, where the density of ads is inversely related to the quality of the content.

Which Sites Felt Fred’s Impact the Most?

Since Google would not confirm the specifics of their Fred update, SEO people started observing sites to identify who were affected most by the implementation.

Barry Schwartz from Search Engine Roundtable made a study of 100 websites that had a steep decline in web traffic, and they all shared two common denominators:

The sites were mostly content driven, like blog sites and video hosting sites.

The sites had a very high density of ads, and most of them seemed to be made for the purpose of generating income from online advertising.

Part of this study also involved taking a look at the sites that were able to recover after they implemented changes to their website.

The changes implemented all involved one thing: Removing their Ads.

This further confirms that Fred was cracking down on sites that were taking their customers’ experience for granted and prioritizing monetization through advertising.

The Maccabees Update

The Google Maccabees update was released back in December 2017 and it caused a 20-30% decrease in organic search traffic for affected sites.

The moniker was given by Search Engine Roundtable’s Barry Schwartz since the update was released during the Jewish festival of Hanukkah.

Maccabees targeted two main areas in the online space:

Keyword permutations

Low quality websites with spammy Ads

That probably brought back memories of the Google Fred update. Yes, Maccabees expands on the algorithm changes initiated through Fred.

Let’s look closely at how this algorithm update works.

What is Maccabees

In the mathematical sense, permutation means “arranging the members of a set into a sequence or order, or if the set is already ordered, rearranging its elements”.

And in SEO, using different keyword permutations on separate pages has been known as an old tactic commonly used by websites in travel, e-commerce, and real estate industries.

It worked well in the past, since it allowed multiple pages to rank for virtually the same keyword and cover all variations of a search query, especially for long tail keywords.

A page focusing solely on a long tail keyword would of course gain priority in SERP rankings, and having multiple pages across a wide range of keywords in your industry ensures coverage of keywords with high conversions.

Here are some examples of keyword permutations.

A travel site having separate pages targeting the following:

Cheap tours to Paris

Low cost Paris tours

Cheap tour packages to Paris

And then a website affiliated with the first one may have different pages targeting:

The best restaurants in Paris

Paris top 10 restaurants

Top 10 restaurants in Paris

Take note that the keywords targeted by these pages are all long-tail keywords, which gives each of them a very high chance of converting.

I did mention earlier that these techniques worked before. But it only worked for ranking in SERP. For customers encountering these pages, it's creating a very confusing and irrelevant user experience.

And Google hates anything that messes up the experience for their customers.

The solution to recover from being hit by Maccabees due to keyword permutations is easy, though: Stop creating multiple pages to rank, and start focusing on one ultra powerful high quality page for your keywords.
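If you want a quick way to spot pages on your own site that might be chasing the same query, a rough check like the one below can help. This is only a sketch under my own assumptions: the stopword list, the 0.4 overlap threshold, and the function names are hypothetical choices, and it says nothing about how Google itself detects permutations.

```python
from itertools import combinations

# Hypothetical, deliberately tiny stopword list for illustration.
STOPWORDS = {"a", "the", "to", "in", "of", "for"}


def tokens(keyword):
    """Lowercase a keyword phrase and drop stopwords, ignoring word order."""
    return frozenset(w for w in keyword.lower().split() if w not in STOPWORDS)


def jaccard(a, b):
    """Share of words two token sets have in common (1.0 means identical sets)."""
    union = a | b
    if not union:
        return 0.0
    return len(a & b) / len(union)


def overlapping_targets(keywords, threshold=0.4):
    """Return keyword pairs whose word overlap meets the assumed threshold."""
    pairs = []
    for first, second in combinations(keywords, 2):
        score = jaccard(tokens(first), tokens(second))
        if score >= threshold:
            pairs.append((first, second, round(score, 2)))
    return pairs


page_targets = [
    "Paris top 10 restaurants",
    "Top 10 restaurants in Paris",
    "The best restaurants in Paris",
    "Cheap tours to Paris",
]
for first, second, score in overlapping_targets(page_targets):
    print(f"{score:.2f}  {first!r} vs {second!r}")
```

A score of 1.0 means two pages target exactly the same words in a different order, which is a permutation in the strict sense. Lower scores flag pages that are close enough to be worth reviewing as candidates for merging into one stronger page.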

The Google Fred Extension

The other change that Maccabees brought to the search engine’s algorithm was an extension of the existing Google Fred, an update that had devastated websites a few months prior.

Caption: One of the sites with high Ad density and thin content quality that felt the impact of Google’s Fred update

Google Fred targeted sites that used black-hat techniques to aggressively monetize all of their pages, whether those pages converted or not.

By “aggressive monetization”, I mean an extremely high number of advertisements that disrupts the user experience, paired with low-value content and few benefits for the user in return.

Maccabees is the last of the “Legacy Updates” we’ll be looking at in this article. For our next section, we’ll dive deep into algorithm updates that were released in 2019.

Recent Significant Algorithm Updates

Google’s Valentines Day Update

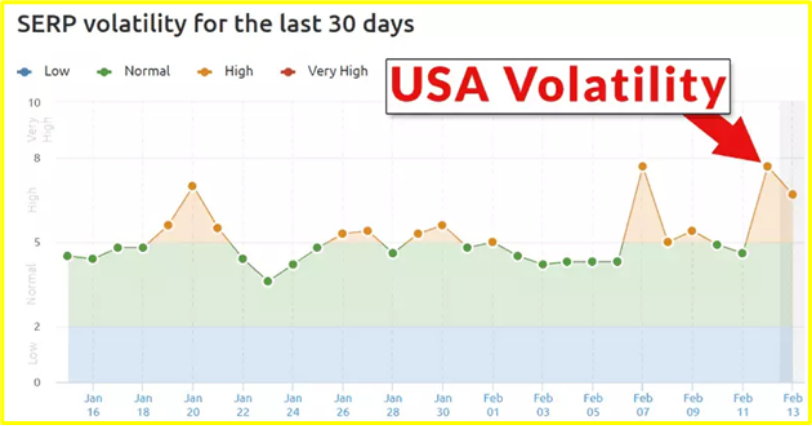

The second month in 2019 saw Google rolling out another update, which in usual Google fashion, was unannounced and unconfirmed.

Being released on February 13, it’s no wonder it would be known as Google’s “Valentines Update”. Its effects, though, were anything but cheesy.

What was the Impact of the update?

Since the Valentines update was both unconfirmed and unannounced by Google, speculation abounded back then. Several SEO personalities had their own theories and hypotheses as to what the update actually did.

The break came when a well-known Black Hat Facebook Group provided observations which aligned with SEMRush SERP Volatility indicators that concerned Mobile users in the US.

This was further reinforced by having similar results in SEMRush’s UK volatility graphs.

The General Consensus regarding this update was that:

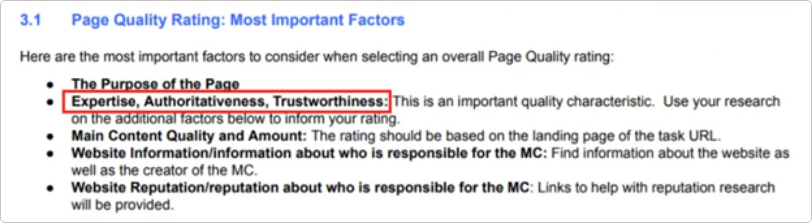

This update relied heavily on Google’s E-A-T guidelines

It judged pages and their content not just on quality but also on relevance

It also looked at the quality of backlinks that are pointing to the site it’s reviewing

With these in mind, the webmasters back then were able to make a solid plan of attack.

What should I do to adapt to this algorithm?

Focus on Relevance but don’t Lose out on Quality

Based on the outcomes gathered by SEOs back in February 2019, it was pretty evident that the update was targeting user intent in particular.

Here’s what Google is looking for from your pages:

High level of Expertise, especially in topics that have essential effects on the quality of human life and lifestyle

Proof of Authority especially of the person writing the content and giving out information

Trust Signals from customers and affiliate businesses that will add more reputation to the website

Quality of the content in terms of context and its relevance to the search intent of the queries

Algorithm updates revolving around Google’s EAT guidelines affect industries whose primary requirement is having tons of expertise in the field.

Industries like health, law, government, science, and finance are some of those typically impacted. By extension, those in the automotive and animal care sectors also require expertise and trust signals.

How Google defines authority and trust, though, is a very broad field. Add to that the fact that Google can change their definitions easily.

Combining all these with how they judge content quality and relevance, it does make for a very complicated combination.

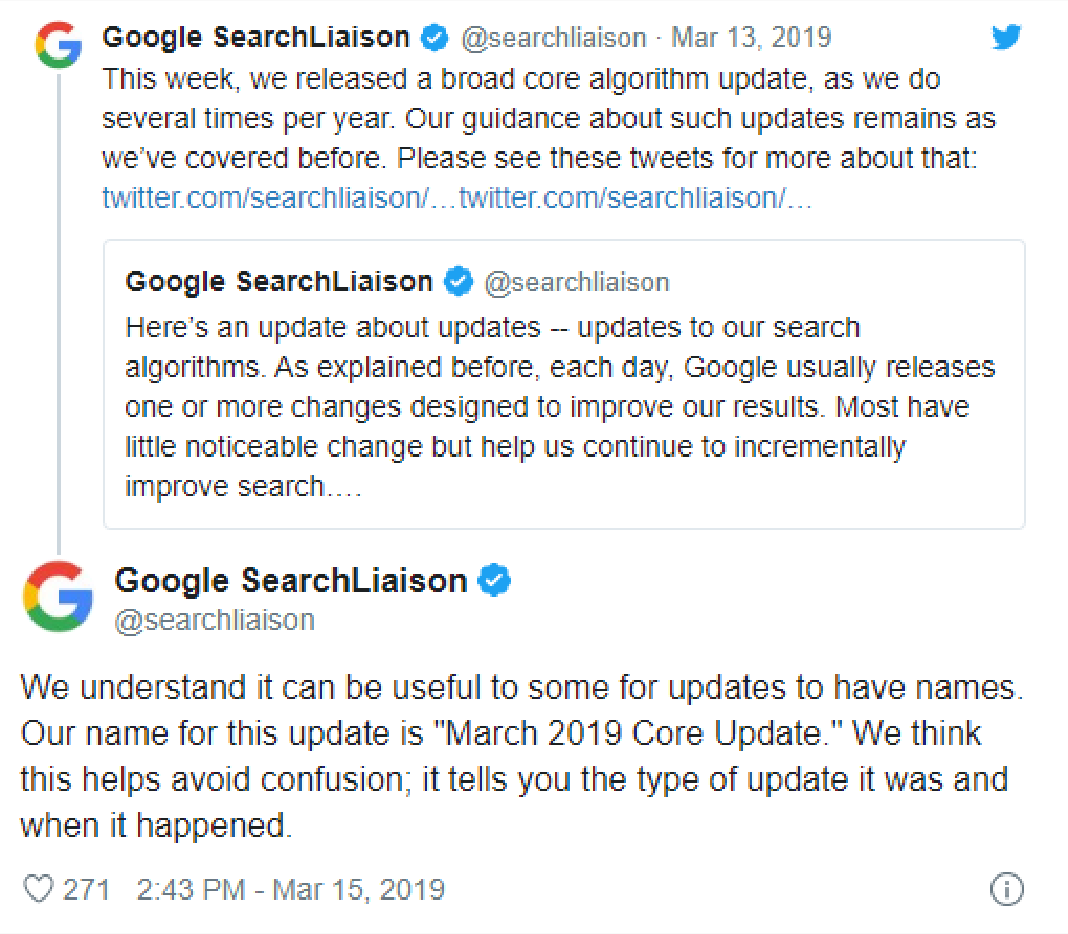

March 2019 Broad Core Algorithm Update (Florida 2)

March 2019 saw the first official update announced by Google. They even suggested a name for it: The March Core Algorithm Update.

Pubcon’s Brett Tabke received advance notice from Google before the update was released. Due to this, SEOs gave this Core update the affectionate title of “Florida 2” before it was actually rolled out.

The name Florida 2 came from the fact that the update rolled out during the Pubcon Florida SEO conference.

This was a significant one for two reasons:

Being called a “Core Update” meant that it made changes to how the algorithm itself works

This update affected Google search results across its indexes on a global scale

The last time Google confirmed the rollout of an update was back in 2018, and that was a Core Algorithm update as well.

What was the Impact of the update?

Google vehemently denied that the Medic Update affected only websites in the field of health and medicine, but that reading still rang true to SEOs despite the denial.

This March 2019 Core update was seen as a softening of that previous algorithm rollout.

In the aftermath of the so-called Medic update (also known as the Query Intent Update), the scope was broadened to industries outside health and medicine.

And thus the acronym YMYL came to the fore. YMYL stands for Your Money or Your Life: a label for sites whose information can heavily affect human life and/or lifestyle.

Sites that belong to YMYL industries need to work harder than their non-YMYL counterparts in gaining EAT (Expertise, Authoritativeness, and Trust) signals.

This is because an incorrect medical prognosis which may lead to the recommendation of the wrong medicine may cause harm or loss of life.

A weighty error compared to say, providing inaccurate information on how to trim garden plants.

What should I do to adapt to this algorithm?

“Just prioritize the users, dammit!” is what my SEO specialist used to say whenever there’s a big update like this and he gets asked by the content people on how to adapt to it.

Well to be specific, it's more of prioritizing the users’ search intent. For this update, Google is actually rewarding websites that can meet their user’s intentions accurately.

Improve Your Content’s Search Intent

As always, research is the key. The type of questions your customers are asking is essential in order for you to build out content that accurately addresses them.

The playing field has greatly evolved from focusing on just one or two main keywords to covering every relevant topic and query and making sure each one is answered by authoritative content.

Check out your competitors as well, especially those who are doing well, and identify what you can emulate. Their gaps are important too, since they show you which opportunities you can capitalize on.

Furthermore, you’d also want to map out your keywords properly depending on the buying cycle your customers are in. Navigational, informational, and transactional keywords each have their own special place in your sales funnel.

Improve Your Website’s User Experience

Malte Landwehr, VP at Searchmetrics, conducted an analysis whose results supported what most SEOs believed at the time: that Google had increased the weight of user signals when measuring pages for rankings.

Some of these signals were page views per visit, time on site (session duration), and lower bounce rates.

And those websites that increased in rankings actually had better numbers in terms of those metrics compared to their online competitors.

These metrics are considered the most difficult ranking factors to meet, since they combine all the factors of a great user experience.

In short, meeting them actually means you gave your customers a very good user experience.

If a website gets its users to spend more time on its pages, open more pages per visit, and bounce back to the SERP at a lower rate, then that site very likely has quality content.
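If you want to track these three numbers yourself, the arithmetic is simple. Here’s a minimal sketch, assuming you can export one record per visit from your analytics tool; the `Session` fields and the rule that a bounce is a one-page visit are my own simplifying assumptions, and analytics tools may define a bounce differently.

```python
from dataclasses import dataclass


@dataclass
class Session:
    pages_viewed: int
    duration_seconds: float


def engagement_summary(sessions):
    """Compute pages per visit, average session duration, and bounce rate."""
    if not sessions:
        raise ValueError("at least one session is required")
    total = len(sessions)
    pages_per_visit = sum(s.pages_viewed for s in sessions) / total
    avg_duration = sum(s.duration_seconds for s in sessions) / total
    # Assumption: a "bounce" is a session that viewed exactly one page.
    bounce_rate = sum(1 for s in sessions if s.pages_viewed == 1) / total
    return {
        "pages_per_visit": pages_per_visit,
        "avg_session_duration_seconds": avg_duration,
        "bounce_rate": bounce_rate,
    }


sample = [Session(1, 20.0), Session(4, 180.0), Session(3, 100.0), Session(1, 20.0)]
print(engagement_summary(sample))
```

Comparing these figures before and after a content or layout change gives you a rough read on whether the user experience is actually improving.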

June 2019 Broad Core Algorithm Update

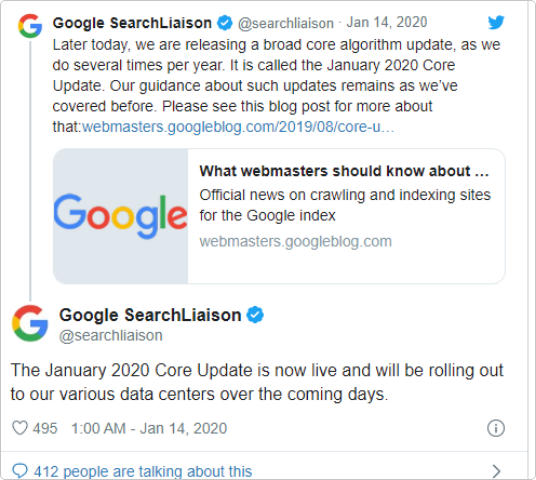

Uncharacteristically, Google announced via Twitter in June 2019 that an update was rolling out across its data centers.

It’s not like Google to share, much less announce, their algorithm updates to SEOs online. However, the nature of this update demanded it, especially since this was a Core Update.

And this marked the second time Google made changes to its core algorithm in 2019.

What was the Impact of the update?

One of the major industries hit hard by the March Core Update was the health sector. We saw tons of websites that were medical in nature either take a hit, or gain rankings.

Expanding on the E-A-T Guidelines

Being the second core update for 2019, the changes made in June expanded upon what the March core update focused on: Google’s E-A-T (Expertise, Authoritativeness, and Trust) Guidelines

To summarize the main aspect that changed between these two core updates: The March Update saw the E-A-T Guidelines as a guideline. The June Update made E-A-T an absolute requirement.

Especially for YMYL (Your Money or Your Life) Sites.

After determining the beneficial purpose of a page, the algorithm then categorizes it as either a YMYL site or a non-YMYL site.

A YMYL site requires higher levels of E-A-T signals before Google would even consider it for ranking. For non-YMYL sites, the requirements are a bit lenient.

Crackdown on Fake Information and News

One of the biggest and most controversial effects of this core update was how The Daily Mail, a well-known tabloid newspaper in the UK, saw a massive decline in rankings.

The Daily Mail officially sought help from the Google Forums, stating how 50% of their search traffic disappeared seemingly overnight.

This made the UK Tabloid site an instant focus of case studies by SEOs who saw the massive hit it suffered.

Here are some of their findings:

The Daily Mail has a reputation of producing sensational and untrustworthy content as mentioned in social media circles

Wikipedia, a high domain authority website trusted by hundreds of millions of online users, listed Daily Mail as a “Deprecated Source” and advised its users to never use it as a reference much less quote it.

The Daily Mail site has heavy ad density and inappropriate placements that hinder users from seeing its content. This results in a bad user experience for its visitors, hence ‘tripping’ the Fred algorithm wire.

All in all, The Daily Mail not only lacked in meeting the Trust Signals required by Google from news and information websites which are part of the YMYL category, it also caught the ire of Fred due to its unethical system of online advertising.

Looking at Criteria Beyond EAT

By now you’ve probably realized that algorithms will continue to evolve and become sharper in terms of judging web pages.

Google’s algorithm no longer cares about matching keywords to web pages. Its operation has become more complex than that.

Ironically, that made SEO more simple for us digital marketers. For Google, it’s all about answering their users’ queries with the best possible content you could ever make for them.

The search engine giant will no longer favor websites that do not focus on providing direct answers to users who are looking for them, and in-depth information to those who need it.

A website loading quickly without 404s is irrelevant for Google if it doesn’t answer their users’ queries directly.

Importance of Testimonials and reviews

One of my favorite marketing quotes comes from Philip Kotler. He said: “The best advertising is done by satisfied customers.”

This has been a principle I have always kept close at heart. And it seems Google shares this belief as well.

The third factor in the E-A-T guidelines, Trust Signals, is a requirement best met by Testimonials and Reviews that come from your satisfied customers.

To understand this principle better, we need to look at the reverse model of the equation:

No matter how awesome your product or its presentation is, how strong the value proposition that supports it, or how accurately it addresses your customers’ needs, one negative review from another customer could plant the seed of doubt in your prospective ones.

The bottom line? Your prospective customers will believe the words of your existing customers more than they will believe you.

What should I do to adapt to this algorithm?

The short answer: Nothing

I’m not saying there’s nothing you can do about it. What I’m saying, or rather what Google actually advised the webmasters at the time was: “Do Nothing”.

As a website owner you might find that unacceptable. As a digital marketer (we always have it worse), your clients will surely find that course of action appalling.

And for good reason, the website you’re managing just took a hit.

However, looking at it from another perspective, Google’s loaded advice is in fact a good course of action to take.

Whenever your website takes a hit from an algorithm update and starts to lose ranking, don’t panic and don’t rush to find something to rectify.

It would be more beneficial for you to instead look for the reasons why Google doesn’t see your site as relevant anymore.

Oftentimes, it’s actually a more productive and efficient undertaking to worry less about what’s wrong with your website. Instead, gain a clear understanding on what makes your competitors who are ranking better than you more relevant than your site.

Why Google’s Guidelines are not an SEO Cheat Sheet

While Google has graciously provided us with a guideline document for Google Algorithms, it is worth saying that you shouldn’t be looking at this as a way to overtake your competition once you comply with what it says.

Sure, you can use the Guidelines as words to live by, sorta like “Google’s SEO Bible”.

But just like everything else in SEO: if everyone can access it, then everyone will be doing the same thing.

Use it as a rulebook. Check whether your website complies with the guidelines set forth by Google. This is the right way to make use of Google’s algorithm guidelines.

It is a long document though, so it will surely take time to read through all of it.

Or, you can make it easier for yourself and just do what Google wants you to do, which is, by the way, the main gist of that whole document: Put your customers as your top priority and give them the best experience.

September 2019 Broad Core Algorithm Update

Back in September 2019, Google gave SEOs and site owners advance notice that a broad core algorithm update would be rolled out and that it might take a few days to complete.

While this was hardly a surprise to concerned parties, the fact that these changes would affect the core algorithm made everyone listening to Google’s signals hold their breath in anticipation.

What was the Impact of the update?

One of the more popular hangouts for SEOs, the Black Hat World Forum, had members reporting losses left and right after the update completely rolled out.

While these posts looked like typical reports of black hat marketers stating losses, and there were no specifics on what caused the ranking declines, losses attributed to links stood out like a sore thumb.

Broad core algorithm updates usually come with a wide range of improvements and changes, mostly focusing on relevance.

Though most of the discussions centered around links and how they were affected, it is highly likely that this was but one part of a huge number of changes that came with the update.

However, there were reports of a link based spam network, well known for massive 301 redirects from expired domains, collapsing around the time the algorithm update rolled out.

Abusing 301 redirects was a notorious black hat SEO technique at the time, believed to exploit a ranking loophole in Google.

The link based spam network had been ranking for quite some time and was then taken down a few days before the September 2019 update.

Cracking down on Expired domains and 301 Link Spams

A few days prior to Google announcing the core algorithm update, several websites already reported significant drops in search traffic. Upon close inspection, it seemed that these drops were caused by irrelevant 301 link redirects from expired URLs.

Back when it became public knowledge that referral links were being considered by Google in ranking pages, webmasters and SEOs clamored to gain as many links as they could for their websites.

This is the root of the methods and techniques we classify now as Off-page SEO.

Now, Google is sending a wake-up call for everyone to be more careful in choosing the links for their pages, as the wrong ones may do more harm than good.

Changes to How Google Looks at No-Follow Links

Another game-changing announcement that Google made along with the September Core update concerned how they treated “No Follow” Links.

Before this update rolled out, Google saw no-follow links as an absolute request to not take that link’s route during a crawl.

With the September core algorithm update, Google’s crawler will be the one deciding whether or not to follow a link, and whether or not to use the link’s ‘influence’ in deciding rankings.

Google’s exact statement on this was:

“When no-follow was introduced, Google would not count any link marked this way as a signal to use within our search algorithms. This has now changed. All the link attributes — sponsored, UGC and nofollow — are treated as hints about which links to consider or exclude within Search. We’ll use these hints — along with other signals — as a way to better understand how to appropriately analyze and use links within our systems.”
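In practice, these hints live in the `rel` attribute of your links. If you want to audit how the links on a page are marked, here is a small sketch using Python’s built-in `html.parser` module. The `LinkHintParser` class name and the sample HTML are hypothetical; only the three `rel` values come from Google’s statement.

```python
from html.parser import HTMLParser

# The three link attributes named in Google's statement.
LINK_HINTS = {"nofollow", "sponsored", "ugc"}


class LinkHintParser(HTMLParser):
    """Collect each anchor's href together with any rel hints it carries."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if href is None:
            return
        # rel can hold several space-separated values, e.g. "ugc nofollow".
        rel_values = (attributes.get("rel") or "").lower().split()
        hints = sorted(LINK_HINTS.intersection(rel_values))
        self.links.append((href, hints))


sample_html = """
<a href="https://example.com/partner" rel="sponsored">Partner offer</a>
<a href="https://example.com/comment" rel="ugc nofollow">Reader comment</a>
<a href="https://example.com/guide">Editorial link</a>
"""

parser = LinkHintParser()
parser.feed(sample_html)
for href, hints in parser.links:
    print(href, hints or "no hints")
```

A listing like this makes it easy to see which outbound links carry a hint and which ones you are vouching for without any qualifier.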

What should I do to adapt to this algorithm?

Since the changes stemming from this update are centered upon the nature of links and how Google views them, it is worth beefing up the quality of the links you are choosing to place on your site.

What are High Quality Links?

The process of link building has been a hot topic amongst SEOs who are looking to rank their pages. This is the other side of the coin in optimizing your site in order to rank it in SERP.

It is an essential factor in how search engines like Google judge your website’s pages in terms of ranking for the keywords you are focusing on in your on-page optimization efforts.

Links are one of the numerous ‘signals’ that Google looks for which shows your website is a quality source of information that is worthy of citation.

Here are three factors that determine the quality of the link:

The Authority of the Page Linking to your Site

The essential thought here: If a high authority page cites your page as a reference in its content, then your page must be relevant and impactful.

This authority, which Google measures as PageRank, signals that an authoritative page sees you as relevant, hence ‘passing’ its authority over to you.

The Overall Authority of the Website Linking to Your Site

Similar to Page Authority, the authority of a website is gauged by what Ahrefs calls “Domain Rating” or DR and what Moz refers to as “Domain Authority” or DA.

Both ratings are third-party estimates of the site-wide authority of the website linking to you. Google does not use these scores directly, but they are handy proxies for how authoritative it is likely to consider that website.

The Relevance of The Linking Content

None of this authority counts for much if the linking content is irrelevant in the first place.

For example: A backlink coming from Wikipedia.org would be a very worthy signal of authority for your website and the page it’s linking to. However, if the Wikipedia article linking to you does not match the topic you are discussing in your content, then you can consider that link useless.

It is worth stating that the main basis of all link building efforts is the relevance of the information on the link to the user or reader seeing it.

How to Build High Quality Links

Here are several off-page SEO strategies that you can utilize to gain high quality links from external websites to your site:

Create Content worth Linking To

Technically, ‘building links’ is not how the backlinking process is supposed to work. Links are supposed to come naturally from external sites to your pages.

Hence, the most powerful links you can gain for your pages are called “Natural Links”.

Now, while Google understands that getting links naturally will be a very hard endeavor especially for newly launched websites, it will still consider natural links to be the best indicator of authority.

This is why creating compelling, unique, and high quality content that other pages would naturally want to link to is your main goal for link building.

Gain Reviews and Mentions for your Product or Service

You know who the top influencers are in your industry, so send them an email and place your products or services in front of them, especially if they have a huge following on social media.

Have them mention your brand in their Facebook posts and tweets, or have them publish content that includes a link to your website’s pages.

This method is called link outreach, and it has been a time-tested formula for building links.

Ask Your Friends and Partners for Links

You can also tap your circle of friends or your personal network, like the people you work with, and have them link to your site.

Do remember though that the main essence of backlinking is relevance.

If you ask people to link to your site, they should be in the same general industry or niche as your business in order for their links to matter.

Link building is not a process that produces results overnight. It takes time, patience, and perseverance to succeed in off-page SEO. And it would take more of that to gain high quality links.

Nevertheless, resorting to shortcuts like buying links is against Google’s guidelines and can have negative effects on your website’s SEO.

Right now, Google does not have an accurate method in its algorithm to differentiate between built, natural, and bought links.

However, based on the direction these updates are taking, the algorithm may have that capability sooner than we expect.

The BERT Update

The final week of October 2019 was rocked hard by an announcement coming from Google: A named update was just rolled out. And Google named this update themselves.

Introducing: B.E.R.T., an acronym for Bidirectional Encoder Representations from Transformers. Its scope of effect included all English-language queries globally.

What does BERT do?

Aside from annoying Ernie? Simply put, BERT is a huge leap that Google has taken in order for its algorithm to understand search queries better.

Saying “understand the search queries better” sounds like an understatement that users wouldn’t even bat an eye at.

So let’s state some examples:

A user who types in “CAD to USD” on the search bar will be met with a currency calculator in the SERP.

Another user who puts in a flight number will get an interface for monitoring that flight’s status in their SERP.

Or yet another user who enters a stock symbol in the search bar will be met with a stock chart of that company on top of their results.

Have you noticed these features recently? They are just some of the great steps Google has taken towards its goal of understanding how its users search online.

And it’s more than just an algorithm trying to “guess” what you are searching for while typing your queries on the search bar.

Now, BERT expands on these features by considering nuances in language and how they affect the meaning of search strings.

That sounds complicated so let’s uncomplicate it. Here are a few great examples from the Google blog:

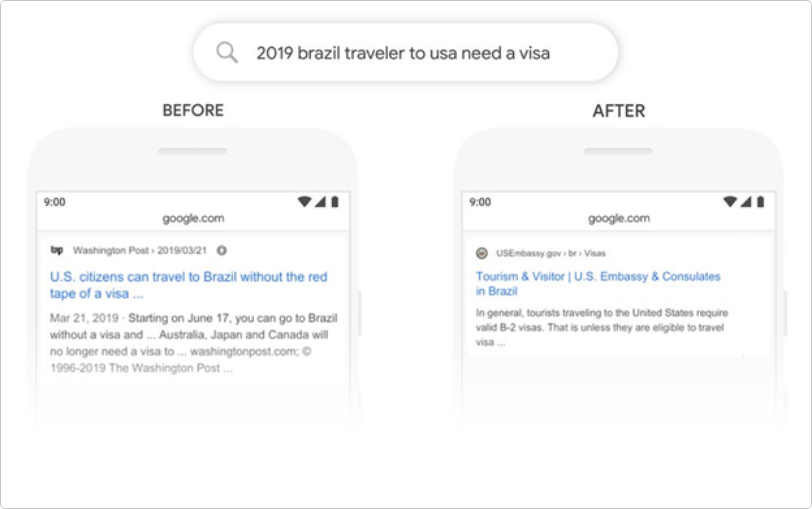

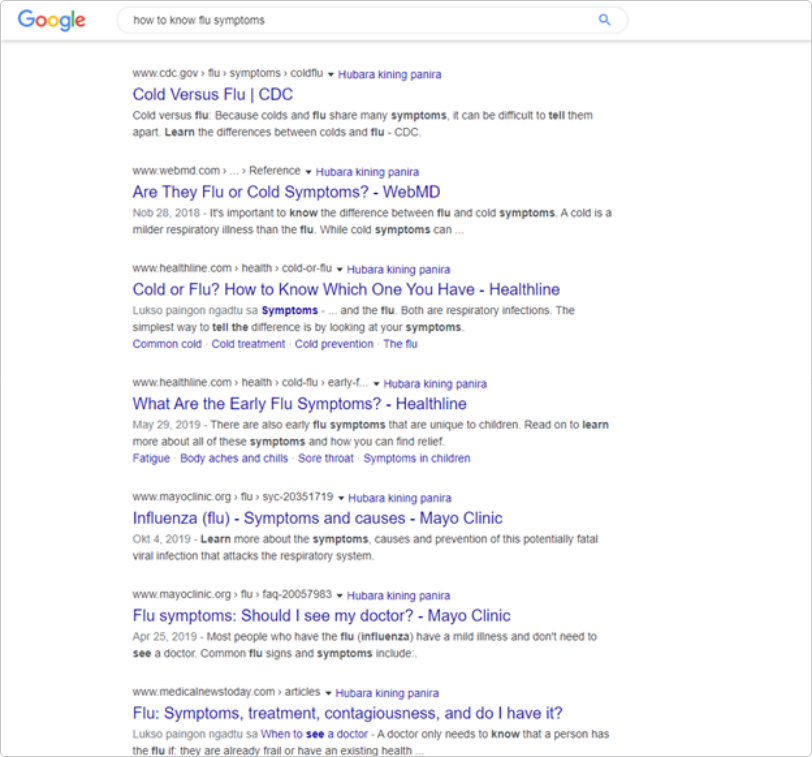

Search Query: 2019 brazil traveler to usa need a visa

The difference between the two results looks negligible, but it is actually very significant.

Before BERT, the results would have centered around the keywords Travel, Visa, US, and Brazil. The algorithm would then look for the most relevant content it could find in its library for those keywords, producing the result on the left.

But that’s not the right answer to our search query, is it? The searcher wasn’t looking into traveling to Brazil from the US; they want to travel from Brazil to the US.

In this context, BERT used the connotation of the word “to” along with the word “need” and took them as signals to decide on the meaning of the search query as a whole.
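To see why pure keyword matching misses the direction of travel, here is a toy Python sketch. It is not how BERT works (BERT is a neural language model), and the two shortened queries and the helper names are made up for illustration. It only shows that a set of keywords throws away word order, while even a crude order-aware comparison keeps it.

```python
def bag_of_words(query):
    """Reduce a query to its set of lowercase words, discarding order."""
    return set(query.lower().split())


def ordered_pairs(query):
    """Keep adjacent word pairs so that word order is preserved."""
    words = query.lower().split()
    return set(zip(words, words[1:]))


# Hypothetical shortened queries with opposite directions of travel.
to_usa = "brazil traveler to usa"
to_brazil = "usa traveler to brazil"

# Keyword view: both queries contain exactly the same words.
print(bag_of_words(to_usa) == bag_of_words(to_brazil))

# Order-aware view: "to usa" and "to brazil" are different pairs.
print(ordered_pairs(to_usa) == ordered_pairs(to_brazil))
```

The first comparison treats the two queries as identical, which is the pre-BERT behavior described above. The second tells them apart because it keeps track of which word follows “to”.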

Here’s another example.

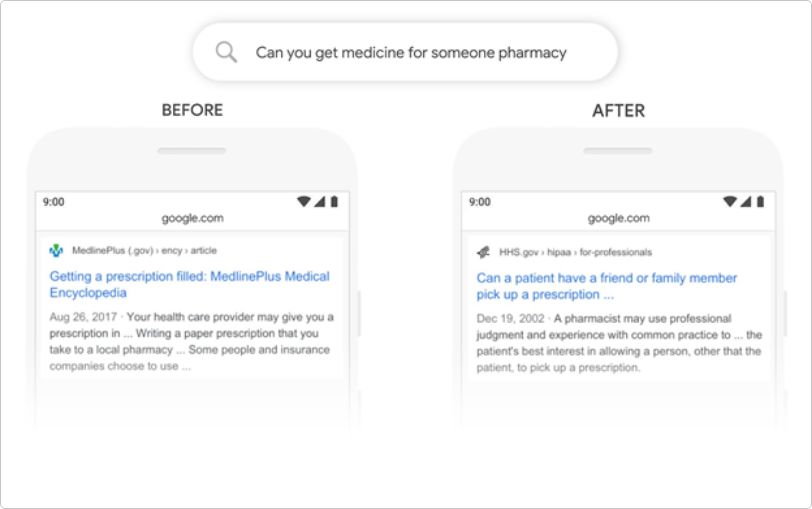

Search Query: Can you get medicine for someone pharmacy

As you can see in the example above, BERT took the meaning of the phrase “for someone” into consideration and decided that the search string meant buying medicine for another person.

Let’s look at one more example.

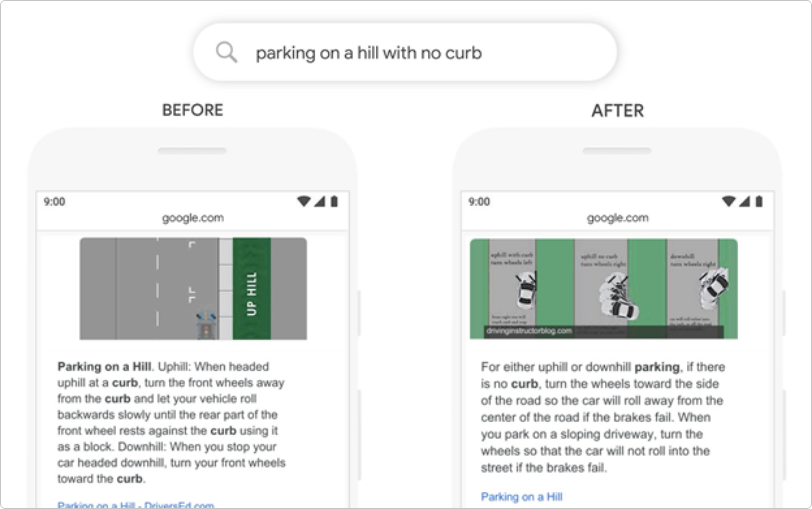

Search Query: Parking on a hill with no curb

Before BERT, Google’s algorithm would focus on the words ‘parking on a hill’ and ‘curb’ without taking the word ‘no’ into consideration.

That small word is critical in defining the meaning of the whole query.

BERT would take note of this small variance in language and reconsider the context of the query based on these nuances.

How Big is BERT’s Scope?

As per Google, BERT will have an effect on 1 out of 10 English search queries globally.

This includes Google search engines in countries with English as a second language like Google France and Google Singapore.

In terms of how big this update is, Google has confirmed that it’s the biggest update since the RankBrain algorithm update was rolled out.

This means there’s a pretty good chance that it affects your website as well. If your website is new or needs further optimization, then BERT will eventually affect your site as your traffic grows.

What should I do to adapt to this algorithm?

Since BERT revolves around search queries, it is worth looking at the three types of queries that your customers use when performing a search.

Each of them falls under one of the three main stages of your customer’s buying cycle:

Informational Search Queries during the Awareness Stage

These queries, as defined by Wikipedia, are search strings that cover a broad topic that may have thousands of relevant results.

When your customer enters these types of keywords in Google’s search bar, their intent is to gain information.

These are also keywords that your customers use when they are at the awareness stage of the buying cycle.

They are ‘aware’ that they have a need for the products or services you provide, but they don’t know you or your brand yet.

Hence, they are looking for more information. They’re not looking for a specific website or brand yet, nor are they looking to make a purchase immediately.

All they need at this point is an answer to a question they have or to learn how to do something they don’t know how to do yet.

Whether they find your website or not during this phase depends on how optimized your web pages are towards addressing their search intent.

Navigational Search Queries during the Consideration Stage

These are queries entered by your customers with the intent of finding a specific website or webpage.

They are commonly branded search queries, in the sense that the searcher already knows the name of the brand that carries the product or service they are looking for.

A good example of this is when your customers type in the word ‘facebook’ in the search bar rather than entering ‘facebook.com’ on the URL bar or clicking on a bookmark on their browser.

Similarly, people typing in brand comparisons like “Nike vs. Adidas Running Shoes” in their search bar also fall under the navigational keyword category, since these queries indicate that they are looking for the websites of these brands.

The stage of your buying cycle in which your customers use navigational queries is called the consideration stage.

It is at this stage where your customers already have a solution to their problem in mind, most probably gained from their time in the awareness stage, and are looking for other options.

This means how well your pages did during the awareness stage will greatly affect whether your brand is considered during this stage.

While the probability of conversion during this stage is low, it is at this phase where the critical thinking of your customers happens.

Hence, showing why your products or services are the best solution available to them is paramount.

This specific aspect decides whether conversions will happen in the next stage or not.

Transactional Search Queries during the Decision Stage

These are queries that indicate a clear intent to complete a purchase or a transaction. I included the broad term ‘transaction’ since conversions don’t always translate to a purchase.

Your conversion model may see your customer contacting you or booking your services, or at times downloading a free app.

Transactional search queries usually, but not always, include exact brand and/or product names like “Apple iPhone X”.

However, generic search queries with a buying intent, like “fuel efficient sedan”, can also be considered transactional.

One easy way to identify transactional keywords is to look for telltale words added to them. Words like “buy”, “purchase”, and “order” are some examples.
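If you have a list of search queries exported from your analytics, a rough first pass for spotting these telltale words could look like the Python sketch below. The function name and the sample queries are made up for illustration, and the word list contains only the three examples above, so treat it as a starting point rather than a real intent classifier.

```python
import re

# Telltale words taken from the examples above; a real list would be longer.
TRANSACTIONAL_CUES = {"buy", "purchase", "order"}


def has_transactional_cue(query):
    """Return True if any whole word in the query is a telltale word."""
    words = re.findall(r"[a-z0-9']+", query.lower())
    return any(word in TRANSACTIONAL_CUES for word in words)


# Hypothetical queries, for illustration only.
for query in ["buy Apple iPhone X", "fuel efficient sedan", "border collie"]:
    print(query, "->", has_transactional_cue(query))
```

Matching on whole words keeps “border” from being mistaken for “order”. Note that “fuel efficient sedan” is not flagged even though it can carry buying intent, so queries without telltale words still need a human look.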

When your customers begin using these search queries, it is a good indication that they are at the final stage of their buying cycle: The Decision Stage.

In essence: They are ready to take out their credit cards and buy something or take the action that meets your conversion model.

However, don’t be complacent yet, as your customers will most likely have some final considerations to make at this stage. The most common ones are pricing and shipping for ecommerce sites. For other businesses, it is location.

Yes, search queries that are local in nature also fall under the transactional search queries category.

This is because the conversion point, at least at the online stage, is your customers getting your local business address and other relevant contact information like your phone number.

It is at this point where your online buying cycle ends and the rest of the conversion happens offline: As foot traffic to your brick-and-mortar business location.

The Google 2020 Core Update

After the BERT update back in October 2019, most SEOs and webmasters had been expecting a core update to roll out soon.

Their forecast was right: Google started 2020 early by releasing the January Core Update.

What the Google Core Update Changed

Whenever a Google Core Update is rolled out, most if not all SEOs usually brace for impact.

This is due to how broad a core update’s coverage is in terms of the changes it makes to Google’s algorithm.

A Google Core Update, in essence, is a process of ‘reassessing’ how the algorithm ranks pages.

This means basic criteria like content quality, E-A-T Guidelines, and high quality backlinks are still being used and will continue to be used even for the core updates that will be rolled out in the future.

What usually changes during these updates is how Google ‘looks at’ these criteria when determining what quality and authority are.

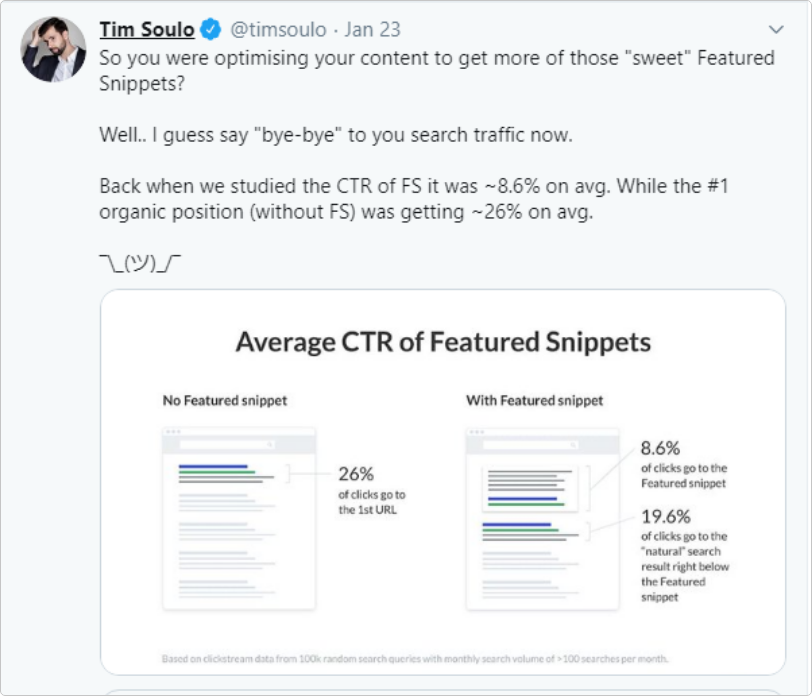

Google gave us an awesome example of how their Core Updates work: